How to Improve Your Website’s Technical SEO

Image source: Getty Images

Technical SEO involves both marketing and development skills, but you can accomplish a lot without being a programmer. Let’s go through the steps.

When you buy a new car, you only need to push a button to turn it on. If it breaks, you take it to a garage, and they fix it.

With website repair, it’s not quite so simple, because you can solve a problem in many ways. And a site can work perfectly fine for users and still be bad for SEO. That’s why we need to go under the hood of your website and look at some of its technical aspects.

Technical SEO addresses server-related, page-related, and crawler issues. Image source: Author

Overview: What is technical SEO?

Technical SEO refers to optimizing your site architecture for search engines so they can index and score your content as well as possible. The architecture pillar is the foundation of your SEO and often the first step in any SEO project.

It sounds geeky and complicated, but it isn’t rocket science. To meet all the SEO requirements, though, you may have to get your hands dirty or call in your webmaster during the process.

What comprises technical SEO?

Technical SEO comprises three areas: server-related optimizations, page-related optimizations, and crawl-related issues. Below, you will find a technical SEO checklist covering the essential elements to optimize for a small site.

1. Server-related optimizations

A search engine’s main objective is to deliver a great user experience. Search engines tune their algorithms to send users to good sites that answer their questions. You want the same for your users, so you share the same objective. We will look at the following server-related settings:

- Domain name and server settings

- Secure server settings

- Primary domain name configuration

2. Page-related optimizations

Your site is hosted on the server and contains pages. The second area we are going to look at for technical SEO consists of issues related to the pages on your site:

- URL optimizations

- Language settings

- Canonical settings

- Navigation menus

- Structured data

3. Crawl-related issues

Even when server and page issues are corrected, search engine crawlers may still run into obstacles. The third area we look at is related to crawling the site:

- Robots.txt instructions

- Redirections

- Broken links

- Page load speed

How to improve your website’s technical SEO

The most efficient way to optimize SEO site architecture is to use an SEO tool called a site crawler, or a “spider,” which moves through the web from link to link. An SEO spider can simulate the way search engines crawl. But first, we have some preparation work to handle.

1. Fix domain name and server settings

Before we can crawl, we need to define and delimit the scope by adjusting server settings. If your site is on a subdomain of a provider (for example, WordPress, Wix, or Shopify) and you don’t have your own domain name, this is the time to get one.

Also, if you haven’t configured a secure server certificate, known as an SSL (secure sockets layer) certificate, do that too. Search engines care about the safety and trustworthiness of your site, and they favor secure sites. Both domain names and SSL certificates are low cost and high value for your SEO.

You need to decide which version of your domain name you want to use. Many people use www in front of their domain name, which simply means “world wide web.” Or you can choose the shorter version without the www, simply domain.com.

Redirect requests from the version you are not using to the one you are, which we call the primary domain. In this way, you avoid duplicate content and send consistent signals to both users and search engine crawlers.

The same goes for the secure version of your website. Make sure you are redirecting the http version to the https version of your primary domain name.
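The redirect rule described above can be sketched as a small Python function. This is only an illustration: it assumes the www version is the primary domain, `www.yourdomain.com` is a placeholder, and the function names `primary_url` and `needs_redirect` are hypothetical. In practice the redirect itself is configured on the web server or at the hosting provider, not in a script like this.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption for this sketch: the www version is the primary domain.
PRIMARY_HOST = "www.yourdomain.com"


def primary_url(url: str, primary_host: str = PRIMARY_HOST) -> str:
    """Return the https URL on the primary domain that `url` should redirect to."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    bare = primary_host.removeprefix("www.")
    # Only rewrite the www and non-www variants of the primary domain;
    # other subdomains keep their host (ports and credentials are dropped).
    if host in (bare, "www." + bare):
        host = primary_host
    return urlunsplit(("https", host, parts.path or "/", parts.query, parts.fragment))


def needs_redirect(url: str, primary_host: str = PRIMARY_HOST) -> bool:
    """True when `url` is not already the primary https version."""
    return primary_url(url, primary_host) != url
```

For example, `primary_url("http://yourdomain.com/about")` yields the https, www version of the same page, which is the single destination every variant should end up on.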

2. Verify robots.txt settings

Run this quick check to verify that your domain is crawlable. Type this URL into your browser:

yourdomain.com/robots.txt

If the file you see uses the word disallow, then you’re probably blocking crawler access to parts of your site, or maybe all of it. Check with the site developer to understand why they’re doing this.

The robots.txt file is a commonly accepted protocol for regulating crawler access to websites. It’s a text file placed at the root of your domain, and it tells crawlers what they are allowed to access.

Robots.txt is mainly used to restrict crawler access to certain pages or entire web servers. By default, though, everything on your website is accessible to anybody who knows the URL.

The file provides primarily two types of information: user agent information and allow or disallow statements. A user agent is the name of the crawler, such as Googlebot, Bingbot, Baiduspider, or Yandex Bot, but most often you will simply see a star symbol, *, meaning that the directive applies to all crawlers.

You can disallow spider access to sensitive or duplicate information on your website. The disallow directive is also widely used while a site is under development, and sometimes developers forget to remove it when the site goes live. Make sure you only disallow pages or directories that really shouldn’t be crawled by search engines.
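To see how user agent and disallow statements combine, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the paths, and the helper name `can_crawl` are made up for illustration; substitute the contents of your own file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: all crawlers are kept out of two directories.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

With these rules, a URL under `/admin/` is blocked for every crawler, while a blog post stays crawlable. A file containing `Disallow: /` under `User-agent: *` would block the whole site, which is the leftover development setting to watch out for.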

3. Check for duplicates and useless pages

Now let’s do a sanity check and a cleanup of your site’s indexation. Go to Google and type the following command: site:yourdomain.com. Google will show you the pages from your site which have been crawled and indexed.

If your site doesn’t have many pages, scroll through the list and note the URLs which are inconsistent. Look for the following:

- Mentions from Google indicating that certain pages are similar and therefore not shown in the results

- Pages which shouldn’t show because they bring no value to users: admin pages, pagination

- Several pages with essentially the same title and content

If you aren’t sure whether a page is useful, check the landing pages report in your analytics software to see if the page receives any visitors at all. If it doesn’t look right and generates no traffic, it may be best to remove it and let other pages surface instead.

If you have many instances of the above, or the results embarrass you, remove them. In many cases, search engines are good judges of what should rank and what shouldn’t, so don’t spend too much time on this.

Use the following methods to remove pages from the index but keep them on the site.

The canonical tag

For duplicates and near-duplicates, use the canonical tag on the duplicate pages to indicate that they are essentially the same and that the other page should be indexed.

Insert this line in the `<head>` section of the page, with the href set to the URL of the page you want indexed:

`<link rel="canonical" href="https://yourdomain.com/preferred-page/" />`

If you are using a CMS, canonical tags can often be coded into the site and generated automatically.

The noindex tag

For pages that are not duplicates but shouldn’t appear in the index, use the noindex tag to remove them. It’s a meta tag that goes into the `<head>` section of the page:

`<meta name="robots" content="noindex">`

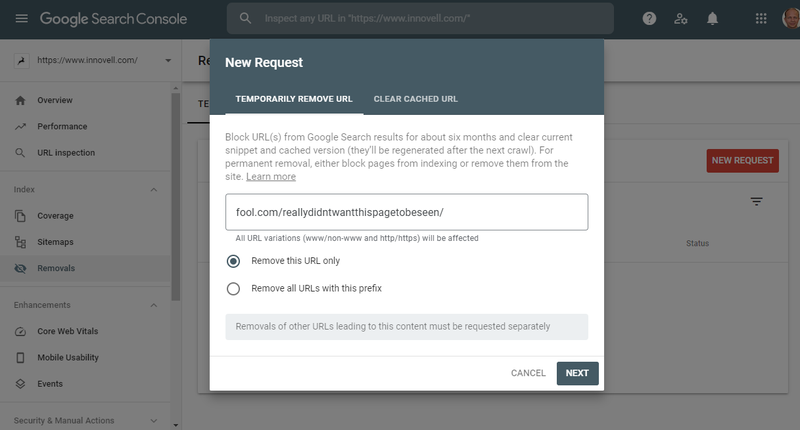
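If you want to confirm which of these tags a page actually carries, a short script can read them from the HTML. The sketch below uses Python’s standard `html.parser`; the class and function names are hypothetical, and it only looks at the `link rel="canonical"` and `meta name="robots"` tags, not at HTTP headers.

```python
from html.parser import HTMLParser


class IndexingTags(HTMLParser):
    """Collect the canonical URL and the noindex directive from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href")
        elif tag == "meta" and attributes.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list, e.g. "noindex, follow".
            directives = [d.strip() for d in attributes.get("content", "").lower().split(",")]
            if "noindex" in directives:
                self.noindex = True


def indexing_tags(html: str):
    """Return (canonical_url_or_None, has_noindex) for an HTML document."""
    parser = IndexingTags()
    parser.feed(html)
    return parser.canonical, parser.noindex
```

Running `indexing_tags` on the source of a duplicate page should return the URL of the page you want indexed; on a page you removed with noindex, the second value should be `True`.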

The URL removal tool

Canonical tags and noindex tags are respected by all major search engines. Each search engine also offers various options in its webmaster tools. In Google Search Console, it is possible to remove pages from the index. The removal is temporary and bridges the gap while the other methods take effect.

You can quickly remove URLs from Google’s index with the removal tool, but this method is temporary. Image source: Author

The robots.txt file

Be careful using the disallow command in the robots.txt file. It only tells the crawler that it cannot visit the page; it does not remove the page from the index, and a crawler that is blocked from a page cannot see its noindex tag. Use it only once you have cleared all unwanted URLs from the index.

4. Crawl your site like a search engine

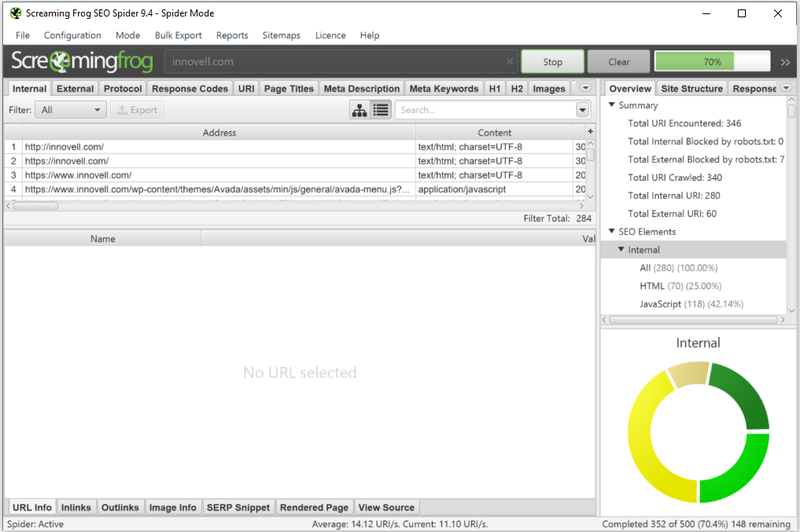

For the remainder of this technical SEO run-through, the most efficient next step is to use an SEO tool to crawl your website via the primary domain you configured. This will also help address page-related issues.

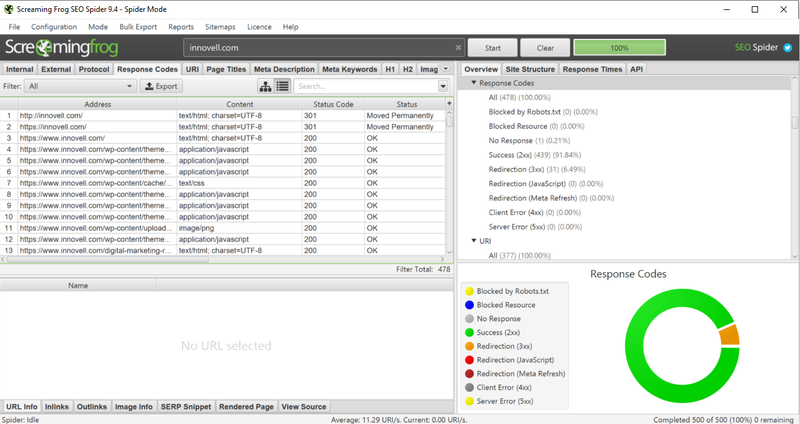

In the following, we use Screaming Frog, site crawl software that is free for up to 500 URLs. Ideally, you will have cleaned up your site with canonical and noindex tags in step 3 before you crawl it, but this isn’t mandatory.

Screaming Frog is site crawler software, free to use for up to 500 URLs. Image source: Author

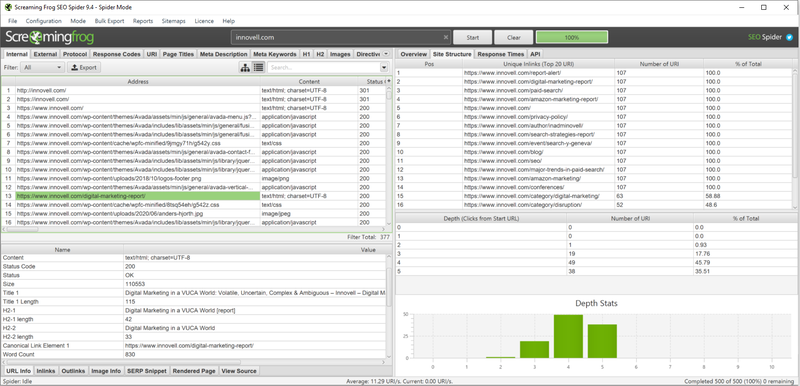

5. Verify the scope and depth of crawl

Your first crawl check is to verify that all your pages are being found. After the crawl, the spider will indicate how many pages it found and how deep it crawled. Internal linking helps crawlers find all your pages more easily. Make sure you have links pointing to all your pages, and favor your most important pages with links, especially from the home page.

If you discover discrepancies between the number of pages crawled and the number of pages on your site, figure out why. Image source: Author

You can compare the number of site pages to the number found in Google to check for consistency. If your entire site is not indexed, you have internal linking or sitemap issues. If too many pages are indexed, you may need to narrow the scope via robots.txt, canonical tags, or noindex tags. All good? Let’s move to the next step.
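Crawl depth and missing pages can be pictured with a small sketch. It assumes you have exported the internal links as a mapping from each page to the pages it links to; the mapping format and the function names are hypothetical, and this is not how any particular crawler stores its data. A breadth-first walk from the home page gives each page’s depth, and any known page the walk never reaches has no internal link path leading to it.

```python
from collections import deque


def crawl_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first walk of the link graph: number of clicks from `start` to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links: dict[str, list[str]], start: str, known_pages) -> set[str]:
    """Pages you know exist (for example, from your sitemap) that no link path reaches."""
    return set(known_pages) - set(crawl_depths(links, start))
```

Pages returned by `unreachable_pages` are the ones to link to from elsewhere on the site, and pages with a large depth are candidates for a link closer to the home page.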

6. Correct broken links and redirections

Now that we’ve defined the scope of the crawl, we can correct errors and imperfections. The first error type is broken links. In principle, you shouldn’t have any if your site is managed with a content management system (CMS). Broken links are links pointing to a page that no longer exists. When a user or crawler follows the link, they end up on a “404” page, named for the status code the server returns when a page is not found.

You can see the response codes generated during a site crawl with SEO tools. Image source: Author

We assume you don’t have any “500” error codes, which indicate critical site errors that need to be fixed by the site developer.

Next, we look at redirections. Redirections slow down users and crawlers. They are often a sign of sloppy web development or temporary fixes. It’s fine to have a limited number of “301” server codes, meaning “Page permanently moved.” Pages with “404” codes are broken links and should be corrected.
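As a rough illustration of how to sort crawl results by response code, the sketch below assumes the crawl has been exported as a mapping from each URL to its status code and redirect target. That export format and the function names are assumptions for this example. It lists 404s, 5xx errors, and redirects that pass through more than one hop before reaching a final page.

```python
REDIRECT_CODES = (301, 302, 307, 308)


def redirect_chain(responses, url, max_hops=10):
    """Follow recorded redirects from `url` and return the list of URLs visited."""
    chain = [url]
    while len(chain) <= max_hops:
        status, location = responses.get(chain[-1], (None, None))
        # Stop at a non-redirect, a missing target, or a redirect loop.
        if status not in REDIRECT_CODES or location is None or location in chain:
            break
        chain.append(location)
    return chain


def audit(responses):
    """Group crawled URLs into broken links, server errors, and multi-hop redirects."""
    report = {"broken": [], "server_error": [], "redirect_chain": []}
    for url, (status, _location) in responses.items():
        if status == 404:
            report["broken"].append(url)
        elif status is not None and 500 <= status < 600:
            report["server_error"].append(url)
        elif len(redirect_chain(responses, url)) > 2:
            report["redirect_chain"].append(url)
    return report
```

A single 301 from an old URL to its replacement is not reported here; only chains of two or more hops are, since those are the ones worth collapsing into one direct redirect.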

7. Generate title and meta information

SEO crawlers will identify a number of other issues, but many of them are outside the scope of technical SEO. This is the case for “titles,” “meta descriptions,” “meta keywords,” and “alt” tags. These are all part of your on-page SEO. You can address them later, since they require a lot of editorial work related to the content rather than the structure of the site.

For the technical part of the site, identify any titles or descriptions that could be generated automatically by your CMS. Setting this up requires some programming.

Another issue you may need to address is the site’s structured data, the additional information you can insert into pages to pass on to search engines. Again, most CMSes will include some structured data, and for a small site, it may not make much of a difference.

8. Optimize page load speed

The last crawl issue you should look at is page load speed. The spider will identify slow pages, often caused by heavy images or JavaScript. Page speed is something search engines increasingly take into account for ranking purposes because they want end users to have an optimal experience.

Google has developed a tool called PageSpeed Insights which tests your site speed and makes recommendations to speed it up.

9. Verify your corrections: Recrawl, resubmit, and check for indexation

Once you have made major changes based on the obstacles or imperfections you encountered in this technical SEO run-through, you should recrawl your site to check that the corrections were implemented correctly:

- Redirection of non-www to www

- Redirection of http to https

- Noindex and canonical tags

- Broken links and redirections

- Titles and descriptions

- Response times

If everything looks good, you’ll want the site to be indexed by search engines. This will happen automatically if you have a little patience, but if you are in a hurry, you can resubmit important URLs.

Finally, once everything has been crawled and indexed, you should be able to see the updated pages in search engine results.

You can also look at the coverage section of Google Search Console to find details about which pages have been crawled and which were indexed.

3 best practices when improving your technical SEO

Technical SEO can be mysterious, and it requires a lot of patience. Remember, this is the foundation for your SEO performance. Let’s look at a few things to keep in mind.

1. Don’t focus on quick wins

It’s common SEO practice to focus on quick wins: highest impact with lowest effort. This approach may be useful for prioritizing technical SEO tasks on larger sites, but it’s not the best way to approach a technical SEO project for a small site. You need to focus on the long term and get your technical foundation right.

2. Invest a little money

To improve your SEO, you may need to invest a little money. Buy a domain name if you don’t have one, get a secure server certificate, use a paid SEO tool, or call in a developer or webmaster. These are probably marginal investments for a business, so you shouldn’t hesitate.

3. Ask for help when you’re blocked

In technical SEO, it’s best not to improvise or test and learn. Whenever you are blocked, ask for help. Twitter is full of amazing and helpful SEO advice, and so are the WebmasterWorld forums. Even Google offers webmaster office hours sessions.

Fix your SEO architecture once and for all

We’ve covered the essential technical SEO improvements you can carry out on your site. SEO architecture is one of the three pillars of SEO. It’s the foundation of your SEO performance, and you can fix it once and for all.

An optimized architecture will benefit all the work you do in the other pillars: all the new content you create and all the backlinks you generate. It can be challenging and take time to get it all right, so get started right away. It’s the best thing you can do, and it pays off in the long run.